Edge AI computing has been a trending topic in recent years. The market was valued at $9 billion in 2020 and is projected to surpass $60 billion by 2030 (Allied Market Research). Growth was initially driven by computer vision systems (particularly for use in autonomous vehicles, no pun intended), and the proliferation of other edge applications, such as advanced noise cancellation and spatial audio, has further accelerated the growth of the Edge AI computing market.

Whether computation takes place in the cloud or down at the edge, data protection remains vital to ensuring the safety of AI system operations. Integrity checking and secure boot/update, for example, are undoubtedly mission-critical. Furthermore, for applications that have a direct bearing on people’s lives (such as smart cars, healthcare, smart locks, and industrial IoT), a successful attack would not only affect the safety of that application’s data set but also potentially endanger lives. Imagine a smart camera surveillance system: if a hacker manages to alter the AI model or the incoming image stream, intrusions and hazards would no longer be inferred and detected correctly.

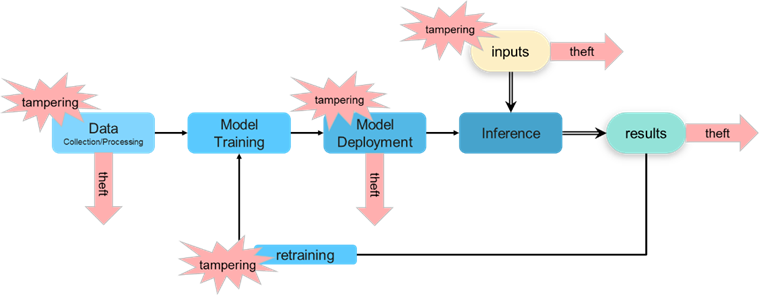

Unlike a standard CPU, which is ready to use out of the box, a neural network needs to be taught how to make the correct inferences before being put to use. Therefore, AI system creators must include the training stage when planning for system security. This means that besides the hardware itself (including the neural network accelerator), other related attack surfaces need to be considered. These include:

- tampering/theft of training data

- tampering/theft of trained AI model

- tampering/theft of input data

- theft of inference results

- tampering of results used for retraining

Careful implementation of security protocols using the cryptographic functions of privacy (anti-theft and anti-tampering) and integrity (tampering detection) can mitigate such attacks. A diagram of the training through implementation stages of an Edge AI system is shown below, along with the above-mentioned threats from tampering and theft:

Since the entire training process requires a non-trivial amount of time and effort for the collection of training data (and for the training time itself), theft of or tampering with the trained model represents a significant loss of company resources and proprietary knowledge. Given the importance of security for Edge AI SoCs, plus the broad scope of attack surfaces available to a determined hacker, it comes as no surprise that today’s designers must make security a primary consideration from the beginning of the design cycle. And just as AI systems are continually evolving, security for such systems also needs to improve in step, so that it can continue to fend off more sophisticated attacks as the complexity of AI systems grows over time.

Before discussing how to protect Edge AI SoCs, we must first understand how attacks could happen. A number of writings already discuss software-based attacks and countermeasures. Meanwhile, attacks directly on hardware have also become popular. The grand prize is the secret key stored in the SoC, since many designs still store their keys in unsafe e-fuse one-time programmable (OTP) memory. Data in e-fuse OTP can be extracted with a transmission or scanning electron microscope (TEM or SEM). Attackers could also employ side-channel attacks (SCA) on designs without proper anti-tamper protection to pry out the key, or they could break the boot process with tampered boot code and take over the system.


A common first step towards improving a system’s security is replacing e-fuse-based OTP memory with anti-fuse OTP. The next, intermediate step would be installing a root of trust, which typically includes protected storage, an entropy source (utilizing a PUF and/or TRNG), and a unique identifier (acting as the foundation for a secure boot flow). The final step would be the integration of a full-featured crypto coprocessor or secure element running in its own protected enclave or trusted execution environment. Such a secure element is capable of performing all security/crypto functions inside its own trusted zone of protection.
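To make the secure boot idea concrete, here is a minimal sketch in Python of a digest-based boot check. It is an illustration under stated assumptions, not how any particular root of trust works: the function names (`provision_reference_digest`, `verify_boot_image`, `boot`) are hypothetical, the reference digests stand in for values that would live in protected OTP storage, and a production flow would typically verify digital signatures rather than bare digests.

```python
import hashlib
import hmac


def provision_reference_digest(boot_image: bytes) -> bytes:
    """Hypothetical provisioning step: compute the digest that would be
    written into protected storage at manufacturing time."""
    return hashlib.sha256(boot_image).digest()


def verify_boot_image(boot_image: bytes, reference_digest: bytes) -> bool:
    """Return True only if the image still matches its reference digest."""
    actual = hashlib.sha256(boot_image).digest()
    # compare_digest runs in constant time, avoiding a timing side channel.
    return hmac.compare_digest(actual, reference_digest)


def boot(stages, reference_digests):
    """Verify each (name, image) stage in order and stop at the first
    failure. Returns the names of the stages that were allowed to run."""
    executed = []
    for (name, image), reference in zip(stages, reference_digests):
        if not verify_boot_image(image, reference):
            break  # a tampered stage halts the chain
        executed.append(name)
    return executed


if __name__ == "__main__":
    good = [("bootloader", b"bootloader-code"), ("os", b"os-code")]
    refs = [provision_reference_digest(image) for _, image in good]
    print(boot(good, refs))
    tampered = [("bootloader", b"bootloader-code"), ("os", b"evil-code")]
    print(boot(tampered, refs))
```

The point of the sketch is the ordering: each stage is checked before it runs, so tampered boot code is stopped before it can take over the system.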

Integrating additional security modules into a single system is an ever-present challenge for designers. Whether refreshing an existing product or developing an entirely new product line, design architects tend to choose the solution that is most complete and easiest to integrate.

As the sizes and feature sets of such security modules/IP vary with the degree of security chosen, integrating multiple IPs is basically inevitable with first-generation designs. This often leads to security being considered from a top-down perspective, beginning with crypto algorithms and ending with key storage as the last piece of the puzzle. However, this approach raises two major concerns:

- creating and maintaining a security boundary covering multiple IPs

- additional integration effort when IPs are from different suppliers

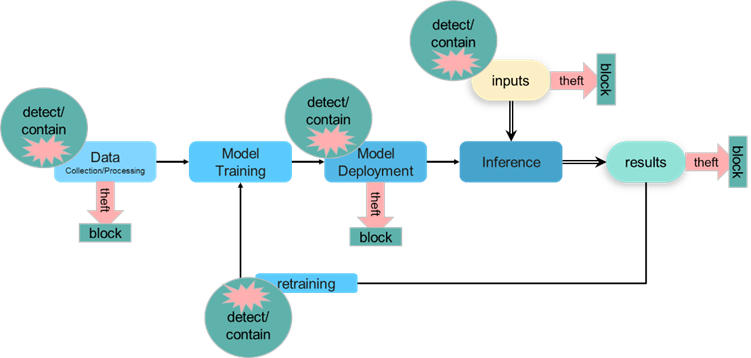

Ease of integration remains important when adding security to any system, not just for an Edge AI SoC. However, since edge devices are deployed in the field (and in many cases reliant on batteries), power consumption is a unique and important consideration for Edge AI SoCs. Unfortunately, this is in direct opposition to the push for more sophisticated functions (related to computation) that need to be run at the edge. Therefore, utilizing more advanced process technologies has become an attractive option for those seeking greater performance and lower power consumption. Current market trends show that more and more Edge AI SoC designs have adopted 12/16nm process nodes, striking the sweet spot between computation power, current consumption, and cost. Once fully integrated, PUFsecurity’s PUFcc provides the necessary protections against the aforementioned threats, blocking theft and detecting/containing attempts at data tampering:

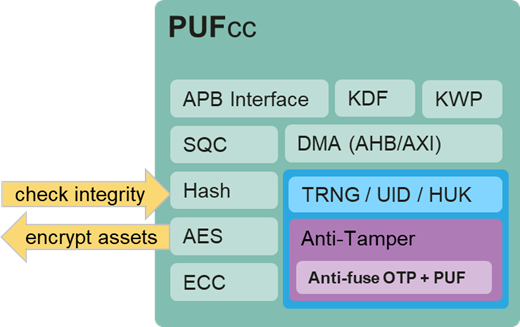

PUFsecurity’s PUFcc secure crypto-coprocessor IP addresses both of the major issues previously raised. PUFcc is based on a hardware root of trust (HRoT) that includes secure storage and entropy sources, then adds NIST-CAVP and OSCCA certified crypto engines, all wrapped in an anti-tamper shell. Because PUFcc offloads the secure operations that would otherwise burden the main core, adding it leads to improved overall system performance and lower power consumption. The architecture of PUFcc is shown below, highlighting the specific blocks designed to contain and detect the threats detailed in the previous figures:

PUFcc’s hash engine generates a digest used for integrity checking to detect tampering; the digest can also serve as the basis for a message authentication code (MAC or HMAC) that performs the same function. To protect against tampering and theft, a cipher engine supporting NIST’s Advanced Encryption Standard (AES) encrypts important AI data and models, rendering them unusable and impenetrable to hackers without the proper key.
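The integrity checks described above can be sketched in software as follows. This is only an illustration using Python's standard `hashlib` and `hmac` modules, not PUFcc's interface: the function names are hypothetical, the key is a placeholder for one that would be held in protected storage, and the AES encryption step is left out because the Python standard library does not provide it.

```python
import hashlib
import hmac


def model_digest(model_bytes: bytes) -> str:
    """Plain SHA-256 digest of the model, usable for integrity checking."""
    return hashlib.sha256(model_bytes).hexdigest()


def model_tag(key: bytes, model_bytes: bytes) -> bytes:
    """HMAC-SHA256 tag: a keyed digest that an attacker cannot recompute
    without knowing the secret key."""
    return hmac.new(key, model_bytes, hashlib.sha256).digest()


def is_model_authentic(key: bytes, model_bytes: bytes, expected_tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(model_tag(key, model_bytes), expected_tag)


if __name__ == "__main__":
    key = b"\x01" * 32  # placeholder; a real key would never appear in code
    model = b"trained-model-weights"
    tag = model_tag(key, model)
    print(is_model_authentic(key, model, tag))          # untouched model
    print(is_model_authentic(key, model + b"!", tag))   # tampered model
```

A bare digest detects accidental or unauthenticated changes only if the reference digest itself is protected; the keyed HMAC variant ties the check to a secret, which is why key storage matters so much in the preceding discussion.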

Not only does PUFcc help Edge AI achieve secure, low-power operation by offloading the CPU’s crypto computations to hardware accelerators, it is also equipped with industry-standard bus interfaces (APB/AHB/AXI) for hassle-free, drop-in integration into existing SoC designs. This minimizes security IP design-in time, so that designers may start on system verification as soon as possible. Already proven on silicon by multiple customers, PUFcc adds security while keeping power consumption to a minimum, two goals which no longer need to be mutually exclusive.


With the addition of a hardware secure crypto coprocessor, the power of Edge AI can be unleashed with even greater protection from malicious attacks. Because the foundation of system security is built in hardware, at the same level as the SoC, protection starts at the device layer, extends through the operating system, and finally covers system applications at the software layer. This is how tens of billions of Edge AI, IoT, and other connected devices are poised to securely emerge into our lives, safely and well protected, right from the start.