As more and more governments and private sectors embark on standardizing quantum cryptography, the era of quantum computing seems imminent. In the face of this new wave, it is imperative to equip ourselves for the forthcoming challenges and opportunities thoroughly. This article will cover some basic concepts of quantum computing, how quantum computing is related to cryptography, and why preparing for post-quantum cryptography (PQC) is important. The conclusion of the article then provides some perspectives on the transition to PQC and how these fit in with overall system security.

Let’s start with a brief look at the historical evolution of quantum computing. The concept of quantum computing (QC) was first introduced as Paul Benioff described the quantum model of computing in 1980. In 1994, Peter Shor’s quantum algorithm for factoring large integers was published, sparking interest among scientists and mathematicians. Later, Messrs. Nakamura and Tsai demonstrated a working qubit using a superconducting circuit in 1999, followed by D-Wave announcing the first commercially available quantum computer just after twelve years. In 2016, inveterate cryptographers might feel the call to action when the US National Institute of Standards and Technology (NIST) issued its request for nominations for its post-quantum cryptography (PQC) standardization program. Subsequently, the National Security Memorandum (NSM-10) was released in 2022, also urging the US to mitigate the threats to our current cryptographic protocols that a cryptographically relevant quantum computer (CRQC) represents. As of 2023, IBM launched the first quantum computer with more than

1,000 qubits.

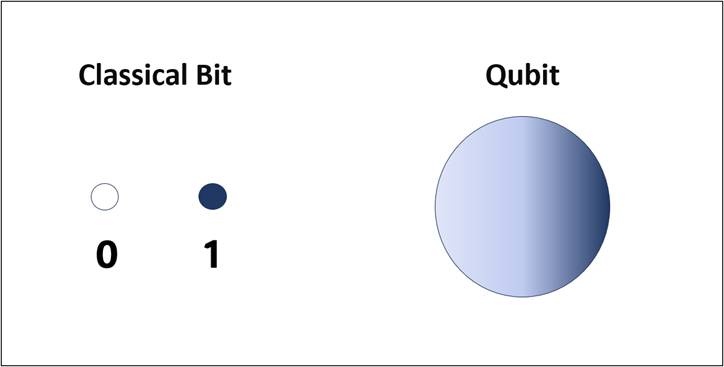

Before exploring further, we need to first understand the main difference between classical and quantum computing. Classical computing processes data in the form of binary bits, which can take the value of 0 or 1, whereas quantum computing uses particles that are in a quantum state called “qubits”. Because the superposition property of quantum mechanics applies to qubits, they can represent 0, 1, or any value in between (until measurement, whereupon the quantum superposition states will collapse) as shown in Figure 1. Then by taking advantage of other quantum mechanics properties such as entanglement, quantum algorithms can perform certain calculations much faster than it would take to complete on a classical computer. For example, Google claimed quantum supremacy in 2019 by performing a series of operations in the span of 200 seconds on their quantum computer, and that they claimed would take a classical supercomputer 10,000 years.

Challenges on the Horizon

This speedup of certain algorithms running on a cryptographically relevant quantum computer (CRQC) is what has modern cryptographers worried about the security of those public-key crypto algorithms used for digital signatures and key exchanges. In a nutshell, most of the public-key crypto algorithms currently in use are now labeled as “CRQC-vulnerable” since they rely on functions which are easy to compute in one direction, but whose inverse is quite difficult to calculate. Because a CRQC will make short work of finding the inverse to these “one-way” functions, we can no longer count on the long computation times of finding the inverse to these functions to provide the guarantee of security for these algorithms. For more details, a complete list of CRQC-vulnerable algorithms is shown in Appendix B of the US Office of Management and Budget’s Memo M-23-02 on “Migrating to Post-Quantum Cryptography”.

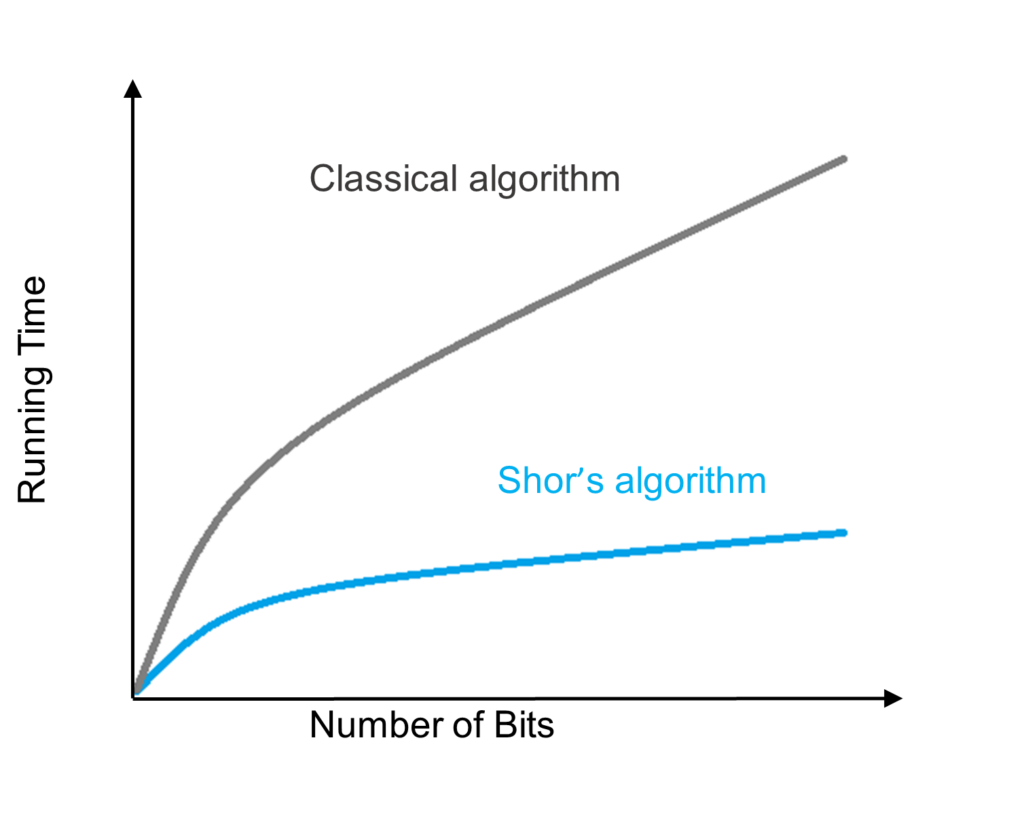

For example, one of the most popular public-key cryptosystems in use today, Rivest-Shamir-Adleman (RSA), relies on the difficulty of factoring the product of two large primes. The fastest algorithm for classical computers is called the general number field sieve, while the fastest (known) one for quantum computers is Shor’s, whose speedup has been estimated to be of a polynomial magnitude. In layman’s terms, if we assume it takes X amount of time for Shor’s algorithm to find the factors of a large integer, then the classical algorithm will take a polynomial of X in time, such as X2 or X3 or other. To simplify this example even further, if Shor’s algorithm takes 100 seconds to find the factors of a large integer, then the classical method could take 1002 (10,000) seconds or more (See Figure 2).

Because CRQCs will be able to perform such classically computation-intensive problems in much less time, there have already been calls to action from numerous governments and organizations to quickly develop post-quantum cryptography (PQC) standards that are based on other mathematical theories, such as lattice-based cryptography. The even more cautious in the industry are pointing to “harvest now, decrypt later” (HNDL) attacks as a further incentive to adopt PQC algorithms as soon as possible. The claim is that bad agents are currently archiving public-key algorithm-protected data in the hopes that future CRQCs will be readily available to help them with decryption.

The “Noisy Intermediate Scale Quantum” Era

Since quantum computing is in its intermediate stage of development, called the “noisy intermediate scale quantum” (NISQ) era, there are still many issues that researchers have not fully agreed upon. One example is what the optimal architecture of a quantum computer should look like, with such competing ideas as ion trap versus superconducting or neutral atom. So, it should come as no surprise that there is also little consensus as to when we can expect the first CRQC. Estimates range from “there is already a CRQC in operation at a top-secret lab” to “probably never”, with the majority guesstimating a future day between five to fifty years from today. If we use qubit count as a measure of performance, a 2021 paper from Google estimated that it would take a 20 million qubit quantum computer to be able to crack a 2048-bit RSA within 8 hours. IBM’s Osprey processor represents the current state-of-the-art at 433 qubits, with their Condor processor expected to break the 1000 qubit barrier in 2023. Assuming this goal can be achieved this year, it is still difficult to estimate when a qubit count in the millions may be achieved.

However, the experts are yet again divided in opinion as to what the minimum number of qubits would qualify a quantum computer as being “cryptographically relevant”. The 20 million qubit figure selected by Google is still only an estimate. In addition, this number will also depend on what quantum algorithm is chosen. For example, in 2022 researchers from China published a paper proposing their own integer factoring algorithm, which claimed that only 372 qubits are needed to break a 2048-bit RSA key. This paper used their success in factoring a 48-bit integer with just 10 qubits as proof of concept. But with the experts finding it difficult to agree upon various quantum computing issues such as architecture and minimum CRQC qubit counts, they agree about the necessity of transitioning to PQC.

Embracing What We Can Accomplish Now

At this point, it is worth the effort to examine the recommendations for moving into the post-quantum world, even though the first available CRQC is at least several years away. Thankfully, the proposed roadmaps for a smooth PQC transition have many similarities, even those from the public and private sectors. Summarized from the guidelines and positions issued by the US Government (including the DHS/NSA/NIST), ANSSI (France), GlobalPlatform, NCTA (National Cable and Telecommunications Association), DigiCert, and the EPC (European Policy Centre), the following key points came up:

- create an inventory of critical data at risk;

- create an inventory of existing cryptographic systems at risk, particularly public-key algorithms such as digital signatures/key exchange;

- consider how long the at-risk data is to be protected, and how much exposure or shielding the system has from external systems;

- check-in with/engage the standards organizations regarding the latest PQC updates, such as the NIST;

- create a plan/timeline for transitioning to PQC;

- stay crypto-agile, consider the possibility of implementing a phased migration to PQC, starting with hybrid mechanisms

- alert and educate staff members of the transition to PQC and schedule timely training sessions

All the above tasks can be started regardless of the new PQC standard finalization. Most organizations can already begin preparations for their journey to a post-quantum world. But it is important to keep in mind that the ultimate goal is to maintain the same level of protection that we enjoyed in the era of classical computing in the post-quantum world. To focus on total security, we will conclude with some perspectives on scope, time, and trust.

Switching to PQC will be a major undertaking, but the scope of this change won’t affect all our familiar, classical cryptographic algorithms. Since the speedup of CRQCs is limited to a select number of calculations such as integer factorization, at present only our public-key algorithms are threatened by CRQCs. For example, the current NIST-recommended AES-256 cipher and SHA-384 hash algorithms are still acceptable to use in the post-quantum world. As an aside, a security solution provider is technically correct when claiming that they are post-quantum compliant when touting their advanced AES implementations.

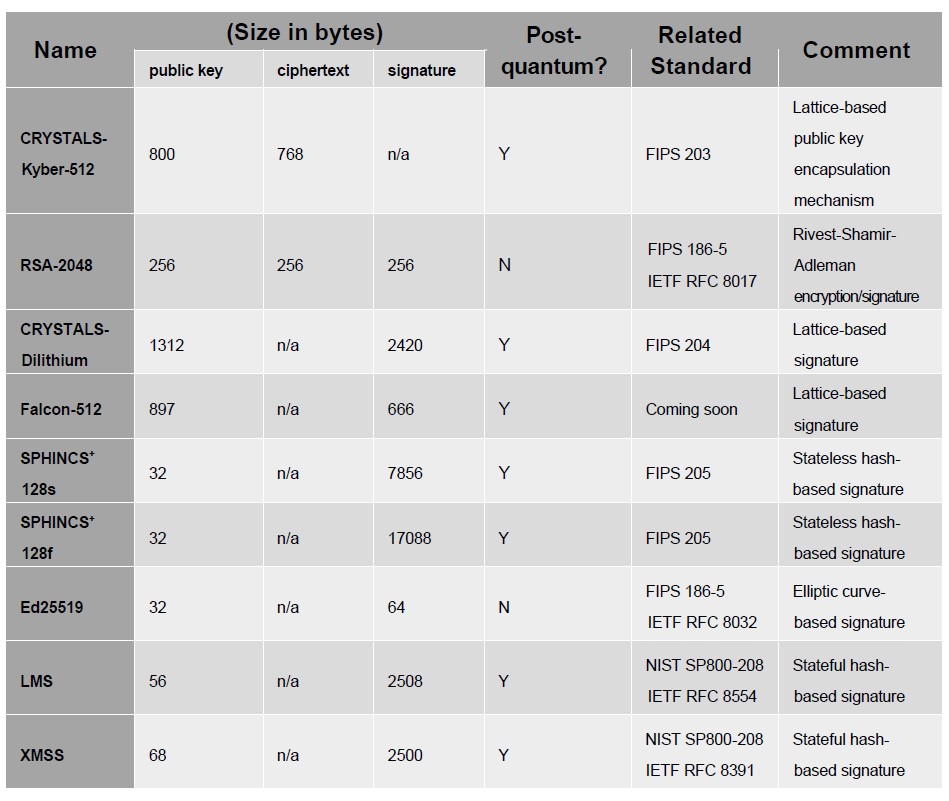

The perspective of time refers to understanding that the transition to full PQC compliance will span many years. Take the data encryption standard (DES) and triple-DES ciphers as examples – deprecated in 2002 and 2017 respectively, there are still systems currently using these legacy, but outdated methods of encryption. In addition, the National Security Memorandum (NSM-10) is allowing organizations up to ten years to complete their transition to PQC, with the timer starting only after the winners of the final round of NIST’s PQC Standardization are announced (expected in 2024). The most recent results from the third round are shown in Table 1 below, listing those algorithms that have already made the cut and will become NIST standards. The table also compares these soon-to-be standards with their classical counterparts in terms of public key and ciphertext/signature size (in bytes):

During this long period of transition, it is vital to stay crypto-agile. Any systems built today need to stay flexible enough to account for possible modifications in the future by understanding that what may appear quantum-safe today may not be so in the future (see the former NIST front-runner SIKE as an example, which was in fact broken by an older, classical computer). Hence, many experts have recommended starting a conversion to PQC with transitory hybrid solutions that combine both classical and PQC algorithms, such as those already employed by companies such as Cloudflare, Amazon, IBM, and Google. For example, the latest version of Chrome 116 now uses a combination of the classical X25519 key agreement and quantum-safe Kyber768 algorithms to create a TLS session key that is strong enough to resist both classical and quantum cracking attempts.

The Escalating Importance of Hardware Roof of Trust (HRoT)

With the continuous evolution of quantum computing, security concerns and levels should advance accordingly, thus requiring more robust safety storage solutions. For example, NeoPUF, a hardware security technology based on physically unclonable variations, will be more powerful than eFuse in meeting high-security demands. The security of a system always rests upon the foundation of trusted and secured keys, regardless of the existence of a CRQC. Without a trusted way to generate and protect private keys, system security simply cannot be guaranteed. At a minimum, important system keys (such as a hardware unique key, or HUK) need to be highly random so that they are unable to be guessed, as well as securely stored to protect against hacking and theft. Hence, the same classically secure methods for creating trust in a system, such as through a hardware root of trust (HRoT), will continue to be used in the post-quantum world.

As all evidence and trends point to that the software root of trust alone is no longer sufficient, an even stronger base of trust must be anchored in the hardware. Employing an HRoT with an all-internal key provisioning system will eliminate exposure (key leakage) during root key generation by removing the traditional, external key creation and injection process. And since the most robust form of such internal provisioning is PUF-based, it is strongly recommended to choose a PUF with highly desirable features such as near-ideal entropy and minimal processing overhead (least/zero amount of required helper data). Finally, to make integration and implementation easier, choosing a solution provider with a proven track record of delivering trust on multiple foundry platforms such as eMemory and its subsidiary PUFsecurity is still our best bet, now and moving into the post-quantum world.